Survey: classifying AI systems used in response to the COVID-19 pandemic

The Future Society, with support from the Global Partnership for AI (GPAI) and the OECD, has launched a survey for people involved in developing AI systems that were used in response to the COVID-19 pandemic.

If you want to participate, please complete this survey by August 2nd, 2021 AoE.

Eligible systems include those launched during the pandemic as well as pre-existing systems that were repurposed for it. The survey is based on the OECD Framework for the Classification of AI Systems; we expect it will take 15-25 minutes to complete. Data collected via this survey will be shared in an open-access, GPAI-labeled repository. The GPAI AI & Pandemic Response Subgroup will select one to three of the most promising classified AI systems, judged by impact, practicality, and scalability, for support in some form by GPAI. We would be grateful if you would share this invitation with those for whom the survey may be relevant.

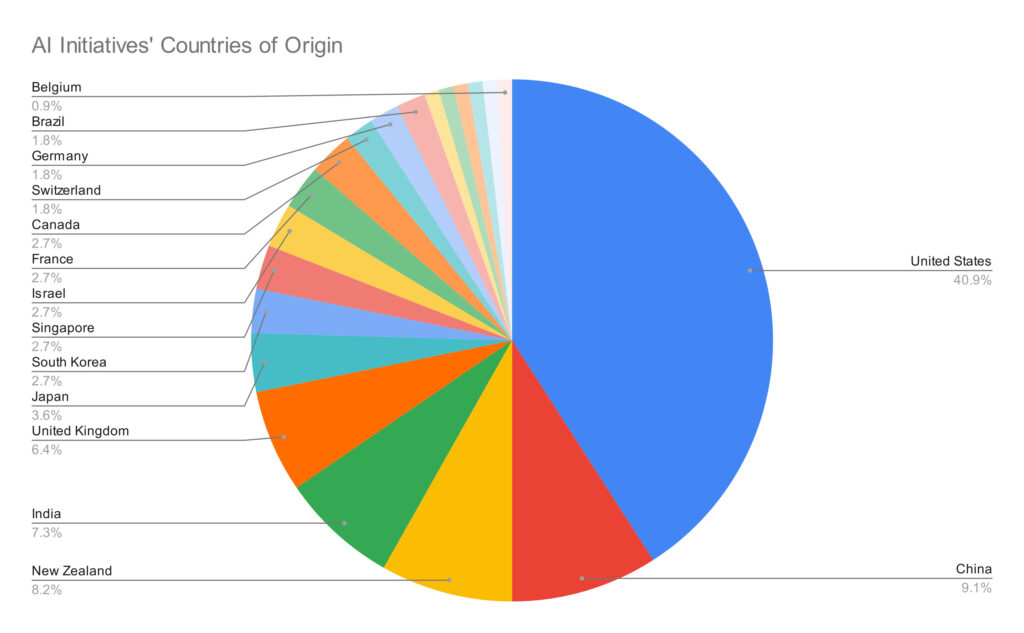

Last year, The Future Society, with support from the GPAI AI & Pandemic Response Subgroup, conducted an analysis of emerging AI “initiatives”, including AI systems, governance mechanisms, ethical/policy frameworks, and platforms to fast-track research and to crowdsource projects that focus on responding to the COVID-19 pandemic.

In our findings, published in Responsible AI in Pandemic Response, we outline the initiatives’ key enabling factors: characteristics that underlie their present and likely future success, such as their organizational architecture, credibility, innovativeness, and implementation of adaptive strategies. The key enabling factors that emerged included the following: the implementation of open science; cross-sectoral and interdisciplinary collaboration; acceleration and refinement of logistical processes that faced exceptional strain during the pandemic; and the capability of being reused or repurposed in future pandemics. We also presented common challenges that initiatives faced, including time-consuming procedures to establish compliance with existing data protection and privacy regulations; a lack of reliable access to appropriate datasets; and heightened public concern and scrutiny around the use and collection of sensitive healthcare data.

We concluded our report with several recommendations to help the AI & Pandemic Response Subgroup respond to the extraordinary circumstances of the pandemic, including:

1. Co-shaping, with other authoritative multilateral institutions, a Global Health Data Governance Framework that will facilitate the development of AI tools that utilize medical data for drug discovery and clinical treatment;

2. Supporting the development of a centralized portal for the advancement of cross-sectoral and interdisciplinary research;

3. Addressing existing social acceptability challenges that hinder public and medical adoption of AI tools and applications;

4. Setting up agile task forces that traverse GPAI working groups and respond to immediate needs and challenges.

In June 2021, acting upon Recommendations 2 and 4, the Subgroup formed a Project Steering Group to launch the AI-Powered Immediate Response to Pandemics project, with the goal of “directly [supporting] impactful and practical AI initiatives to help in the fight against the COVID-19 pandemic.” The Future Society is honored to have been selected as a partner in this project to produce a “living repository” (an open-access, regularly updated dataset) of AI initiatives developed and used in the context of the COVID-19 pandemic.

To aid in our information-gathering efforts, starting today, we are inviting those associated with the development of AI systems used in the COVID-19 pandemic response to complete this survey by August 2nd, 2021 AoE. In addition to crowdsourcing input via this survey, our analysts have begun classifying systems identified in last year’s report. The metrics we will use to analyze these systems include those delineated in the OECD Framework for the Classification of AI Systems, along with additional metrics devised for this particular use case with the Project Steering Group. Beginning August 2021, Subgroup members will use this repository to select one to three of the most promising initiatives, judged by impact, practicality, and scalability, to be supported by GPAI.

The benefits of this exercise are threefold. First, the living repository will facilitate knowledge-sharing about the AI systems used in pandemic response and allow the research community to gauge the dynamic landscape of AI systems developed for this use case. Second, we believe that, by mobilizing its resources behind several promising initiatives, the Subgroup will be able to substantially help these initiatives scale up their impact. Third, by integrating the recently developed OECD Framework for the Classification of AI Systems into our survey, we will be able to support the OECD’s public consultation on its framework by testing its robustness with a large number of AI systems.

An existing challenge across the fields of AI governance, ethics, and safety is the lack of a common language to characterize AI systems. As a result, industry workers, researchers, policymakers, and regulators speak in incongruent terms. Commercial AI developers focus on the scale and applications of their technology, but their published materials often lack technical details regarding systems’ data, model, task, and output. Conversely, academic work explores the minutiae of models and experiments, but it often relies on jargon that is inaccessible to laypeople and lacks substantive exploration of the real-world adoption of technologies and its implications. Classification will bridge this gap by building a unified vocabulary across these stakeholders.

We have decided to utilize the OECD Framework for the Classification of AI Systems as it is the most robust and authoritative classification framework to date. It deconstructs AI systems into four dimensions: context; data and input; the AI model; and task and output. Each of these dimensions is further broken down into concrete subdimensions to allow for more technical characterizations of AI systems, prompting questions such as: “Who are the system’s users? What degree of choice do they have? How are the data and input collected? Are they structured? How is the model built or trained? What kind of task does the system perform, and how autonomous are its actions?”
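As a rough illustration of how a single classified system might be organized along these four dimensions, the sketch below uses a hypothetical Python record. The class name, field names, and example values are assumptions made for illustration only; they are not taken from the OECD framework’s text or from the survey itself.

```python
from dataclasses import dataclass, field


@dataclass
class AISystemClassification:
    """Hypothetical record with one group of answers per dimension."""
    name: str
    # The four dimensions named in the text. The keys stored inside each
    # dict are illustrative placeholders, not official subdimensions.
    context: dict = field(default_factory=dict)
    data_and_input: dict = field(default_factory=dict)
    ai_model: dict = field(default_factory=dict)
    task_and_output: dict = field(default_factory=dict)

    def missing_dimensions(self) -> list:
        """Return the names of dimensions that have no answers yet."""
        dims = ("context", "data_and_input", "ai_model", "task_and_output")
        return [d for d in dims if not getattr(self, d)]


# An invented system, used only to show how the record could be filled in.
example = AISystemClassification(
    name="Example triage assistant",
    context={"users": "clinicians", "degree_of_choice": "advisory only"},
    data_and_input={"collection": "hospital records", "structured": True},
    ai_model={"how_built": "trained on labeled data"},
)

print(example.missing_dimensions())
```

Here the `task_and_output` dimension is left empty, so `missing_dimensions()` reports it as still needing answers. Keeping each dimension as a separate field mirrors the way the framework asks a distinct set of questions for each one.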

In the natural sciences, the classification of elements into periods, proteins into classes, and living things into kingdoms has helped humans understand their shared intrinsic characteristics and emergent properties in living systems. Similarly, classifying AI systems will allow us to develop knowledge of their intrinsic and emergent properties in technosocial environments. Research and policymaking communities will be better equipped to recognize, label, monitor, preempt, and prevent deleterious societal impacts. By constructing a common language between industry, academia, and policymakers, the process of classification will provide the scaffolding for safer, more ethical, and more governable technology.

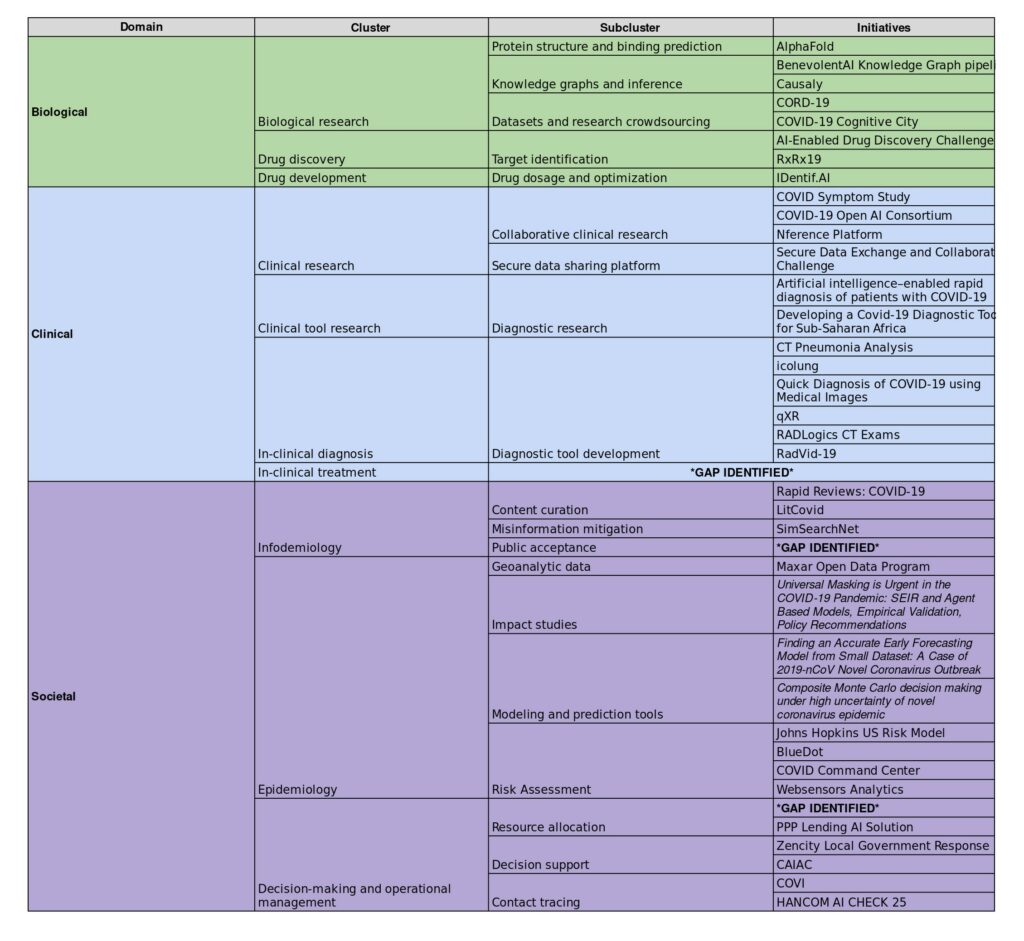

In the context of pandemic response, these classification efforts will help us understand how AI systems could be used with minimal risk in clearly defined use cases. In last year’s research, we identified a number of these use cases, including protein structure and binding prediction, clinical diagnostics, and epidemiological modeling (read more on our clustering framework here). One and a half years into this crisis, there are still many ways in which these AI systems can mitigate the pandemic’s impact: by preventing the spread of more dangerous SARS-CoV-2 variants, aiding in the identification and isolation of high-risk individuals, and supporting public health decision-making. We are hopeful that our research will elevate the impact of those AI systems that have the potential to curb this pandemic and that were built with fairness, transparency, and safety in mind.

We are also encouraged by the longer-term potential of this research to aid in the prevention of future pandemics. We can imagine what the world might look like if the tools that exist today were readily accessible at the outbreak of a future infectious disease: news-scraping tools could flag hot spots within days; contact-tracing systems could identify potential carriers and help them isolate themselves; and automated calling systems could provide instant check-ins with high-risk individuals in the comfort of their own homes. These are only a few ways that AI systems could support public health infrastructure to prevent infectious disease outbreaks from becoming full-scale pandemics.

From drug discovery to clinical research, epidemiological modeling, misinformation mitigation, and logistical management, AI systems have the potential to improve our ability to respond to public health crises. When the next infectious disease is identified and eradicated quickly, we can thank the researchers, policymakers, public health professionals, and AI system developers who have used the lessons from the COVID-19 pandemic to build more resilient public health infrastructures.

Other contributors to this work include Michael O’Sullivan, Paul Suetens, Sacha Alanoca, Kristy Loke, Bruno Kunzler, Abdijabar Mohamed, Lewis Hammond, and Neda Pourreza.